It’s hard to write about Generative AI and Large Language Models without writing about capitalism. That’s the point of GenAI, isn’t it? To further the capitalist ideal of maximizing profit?

Certainly, that is what the LLM purveyors are selling us: lower your costs while creating many more outputs many times faster than mere human beings. The output is not especially good, but there is so much of it and it is so instant! Who cares about excellence when you can have seemingly infinite volume with fewer of those expensive human beings?

This is the moment where capitalism has lost the plot.

Market capitalism is a system that incentivizes people to maximize efficiency in pursuit of material wealth. It has been very effective in that aim. Economist Brad DeLong estimates that an unskilled laborer in 1870 London would have produced roughly the equivalent of 5,000 calories in economic value each day. In other words, not enough to feed his family. Today, an unskilled worker in London produces the equivalent of 2.4 million calories a day, enough to feed her own family and several hundred others besides.

That tremendous leap forward in material well-being is in large measure the result of market capitalism and how it incentivizes corporations and individuals to apply technological progress in pursuit of profit. To be sure, we struggle mightily to distribute the resulting wealth as equitably as we should, but after many thousands of years of humanity, we no longer struggle with not having enough. Capitalism must surely be credited with an assist.

Today, at the societal level, we don’t need more of anything. Indeed, most of us struggle under the weight of too much. Too much carbon. Too much plastic. Too many ultra-processed calories. Too much endless, unfulfilling content in endless, unfulfilling social media feeds. (Capitalism gets an assist here too.)

Nevertheless, here we are, still blithely organizing our society around the pursuit of efficiency and material wealth. Except now we stand on the cusp of a new paradigm that will allow us to create nearly infinitely, with ever less human intervention.

What is a human being for?

Psychology professor Michael Inzlicht has coined the term “effort paradox” to capture the notion that effort is sometimes avoided and other times valued. He posits that effort is a mechanism for creating meaning and purpose. Only by expending effort can you feel a sense of mastery. It is also a form of social currency, a way to demonstrate your commitment to a group or a cause. Effort is valuable, too, as an antidote to boredom. People don’t actually like to do nothing for very long, and will seek out ways to spend effort once boredom sets in.

GenAI and LLMs undercut all of these positive attributes of effort. Knowledge workers who are required to integrate AI into their workflows bemoan the loss of meaning and purpose in their work. Human-human relationships are displaced by human-AI transactions. People are consigned to babysit the AI rather than drive the work themselves, representing a loss of agency and autonomy in favour of reaction and monotony.

From a capitalist perspective, none of that matters. The system is working as intended: seek profit, minimize the effort and cost, let the invisible hand take care of the rest. If that means people no longer write their own words, it is no different than when people stopped being blacksmiths. Mustn’t stand in the way of progress.

The struggle is real; the AI is not

If the LLMs do all the writing, what do we lose?

The written word on the page has value as output. But the messy, frustrating, difficult process of writing is not a problem to be solved. The inefficiency is a feature, not a bug. Setting aside questions of quality, relying on the output of an LLM robs you of the benefits that come from expending your own effort. Your understanding will be surface-level. Any objections you run into will render you inert, unable to counter because you haven’t done the heavy lifting yourself. You won’t be motivated to see your ideas through because they are not really yours. The output will be forgotten and discarded as easily as it was acquired.

Language, writing, and creating are also mechanisms for discovery and decision-making. When you set out to write about, say, AI and capitalism, the ideas do not emerge fully formed like Athena from the head of Zeus. Rather, the ideas take shape through thousands of small decisions about words and structure, each one clarifying, refining, defining. When you let GenAI do the work, you delegate most of those decisions*.

Delegation of decision-making is a radical departure from all the automation that has come before. Whatever technical wizardry we deployed, the decision-making still resided with the human beings who designed, built, and ran the machinery. Many decisions were taken away and hidden from front-line workers, but some human somewhere still made the decision before it was programmed into the machine. The GenAI models are different, because they are making all of those small decisions on our behalf, without us ever knowing what was considered and ultimately discarded.

We lose something real when that happens. Recent research surveyed workers who use GenAI at work at least once a week. The researchers found:

“While GenAI can improve worker efficiency, it can inhibit critical engagement with work and can potentially lead to long-term overreliance on the tool and diminished skill for independent problem-solving.”

The kinds of work that workers did also shifted, with seeking, generating, and doing displaced by verifying, combining, and supervising. The issue is that cognitive skills are not switches you can turn on and off at will. We see that when people rely on GPS directions rather than building their own mental maps, the region of the brain associated with mapping literally shrinks. Cognitive skills that aren’t practiced and maintained will atrophy.

We need a certain amount of struggle — grappling with ideas, making trade-offs, doing hard things. It’s how we maintain and expand our capacity to think, and also how we figure out what we think. Written outputs are valuable and useful, but the process of creating them is what is essential to us as humans. We need effortful pursuits to flourish.

Human flourishing is the point

When capitalism was first emerging in the 18th century, there wasn’t enough food or shelter to go around. In that context, it seems preferable to have productivity gains from division of labour, even though it replaced fulfilling work for craftspeople with repetitive drudgery on an assembly line. “Not starving” is pretty foundational to human flourishing.

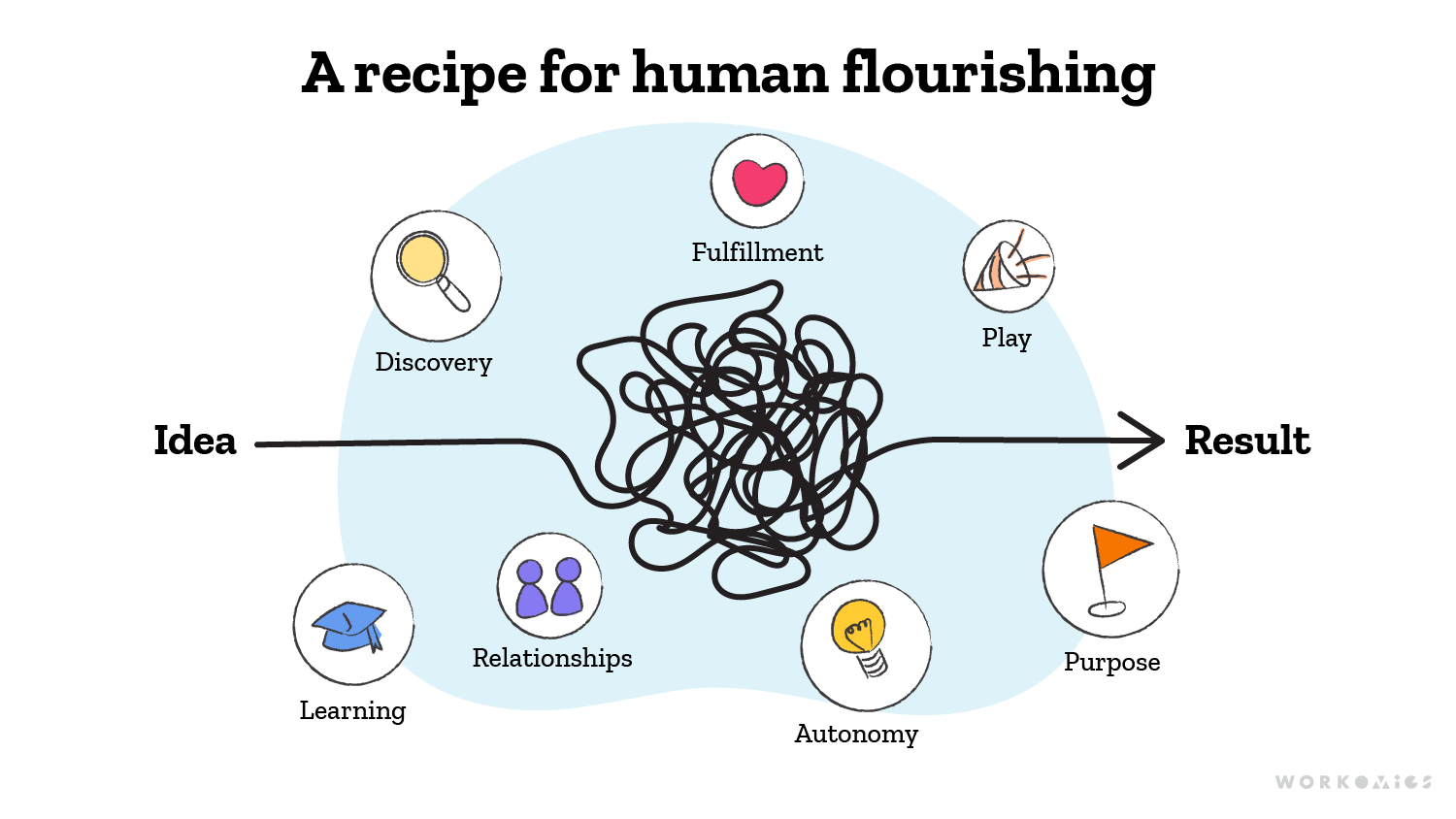

The world we live in now is very different. Mostly, we are applying GenAI to create “more” where we already have too much. If we prize efficiency and profit above all else, the LLMs seem inevitable. But the capitalist paradigm of efficiency and profit sprang from the trade-offs of a bygone era. The world today is already abundant in material wealth, and approximately no one thinks AI will help distribute it more equitably. LLMs maximize efficiency at a ludicrous scale, but in exchange, we forsake fundamentally human things like discovery, learning, autonomy, relationships, purpose, fulfillment.

We should be very sure the payoff is worth it.

Assessing the payoff will require corporations to be something other than profit-maximizing machines in the capitalist ideal — to grapple with their own version of the effort paradox. Sometimes, effort should be minimized; other times, not.

To wield AI effectively, organizations will need to have a real clarity of purpose: a reason to exist that goes beyond producing a particular output as profitably as possible. They will need to think deeply about when and how AI helps or hinders. Is near-term efficiency worth it, if the result is a long-term decline in the organization’s capacity to create, innovate, differentiate? If there is no one who can supervise the LLMs in 10 years’ time, because the AIs took the on-the-job learning opportunities away from junior employees? If the LLMs are commoditized and all your competitors can produce the same blandly average offerings?

Ultimately, the decision to leverage GenAI is not unlike the decision to outsource. There’s nothing wrong with it, when it’s a supporting role, in the areas where “adequate” is all you need. But if you outsource the core of your offerings, sooner or later your customers will realize you are just a middleman and cut you out.

Equally, there are some problems out there where we really should privilege efficiency above all else. If you’re using AI to develop new cancer drugs, go with God. I hope market capitalism makes you really rich because you invented new treatments and reduced human misery.

But there are all kinds of other problems where prioritizing efficiency and applying AI is to deeply misunderstand what it is to be human, what makes for a happy life. If you are using AI to call your parents for you, or write your guide to summer, or make your video game, or teach your course, then you have been led astray. When an AI makes these things, the best case is a mid output that adds to the noise. It’s pointless. Even if there is, improbably, financial profit to be had, you have inflicted a net cost on society and robbed yourself of something meaningful.

But when a real live person makes these things, that’s a very different premise. Human beings are in the arena, connecting with each other, expanding their understanding of the world, making compromises, striving for better. It will be inefficient and effortful. The result might be mediocre or it might be profound. Either way, humans and humanity will have benefitted from the pursuit.

*Ted Chiang explores this idea in his New Yorker article “Why A.I. Isn’t Going to Make Art.”
